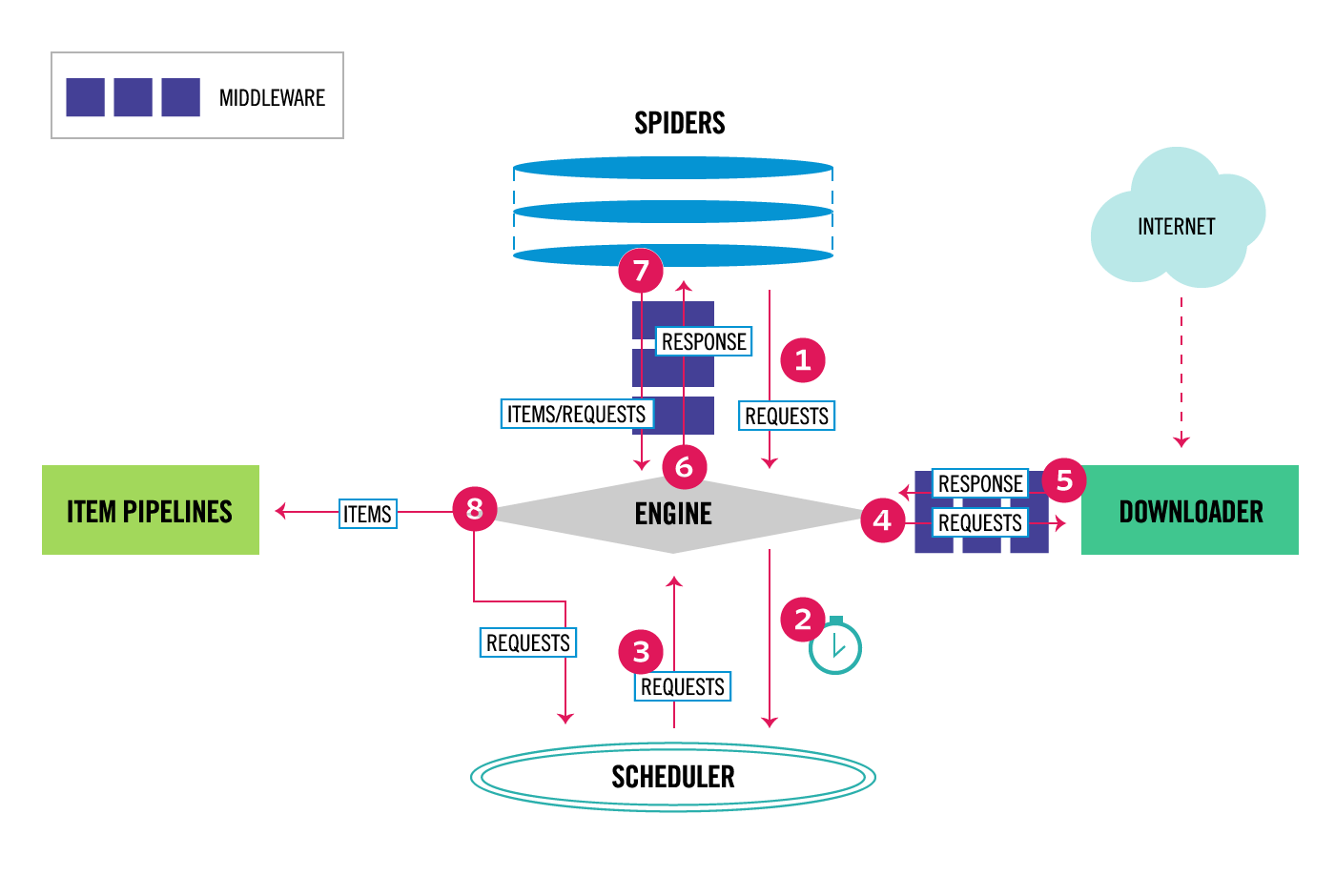

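1. The spider yields a `Request` object to the engine.
2. The engine passes the request straight to the scheduler. In the code, this is a call to the scheduler's `enqueue_request` method, and the scheduler then puts the request into its queue.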

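```python
# The engine's schedule method (excerpt of ExecutionEngine in scrapy/core/engine.py, older Scrapy releases)
from scrapy import signals

class ExecutionEngine:
    # ... other methods omitted ...
    def schedule(self, request, spider):
        # Tell listeners that the request is being scheduled
        self.signals.send_catch_log(signal=signals.request_scheduled,
                                    request=request, spider=spider)
        # enqueue_request returns False when the scheduler rejects the request (e.g. a duplicate)
        if not self.slot.scheduler.enqueue_request(request):
            self.signals.send_catch_log(signal=signals.request_dropped,
                                        request=request, spider=spider)
```

3. The engine gets the next request from the scheduler through `_next_request_from_scheduler`, which calls the scheduler's `next_request` method.
4. Analyzed together with step 5.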
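To make the round trip of steps 2 and 3 concrete, here is a toy scheduler in plain Python. It is not Scrapy's `Scheduler`: the class names `ToyScheduler` and `Request`, the URL-based duplicate check and the FIFO deque are simplifying assumptions (Scrapy's real scheduler uses priority queues and a fingerprint-based dupefilter). It only mirrors the two methods named above: `enqueue_request` returns `False` for a rejected request, which is the case where the engine sends `request_dropped`, and `next_request` returns `None` when nothing is queued.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    # Hypothetical stand-in for scrapy.Request: only the fields this sketch needs
    url: str
    dont_filter: bool = False


class ToyScheduler:
    """Hypothetical scheduler mirroring the enqueue_request/next_request interface."""

    def __init__(self):
        self.queue = deque()  # pending requests, served first-in first-out
        self.seen = set()     # URLs already accepted (a crude dupefilter)

    def enqueue_request(self, request):
        # Reject duplicates unless dont_filter is set; False tells the
        # engine that the request was dropped
        if not request.dont_filter and request.url in self.seen:
            return False
        self.seen.add(request.url)
        self.queue.append(request)
        return True

    def next_request(self):
        # Called by the engine when it wants something to download;
        # None means nothing is queued right now
        return self.queue.popleft() if self.queue else None


scheduler = ToyScheduler()
print(scheduler.enqueue_request(Request("https://example.com/a")))  # True
print(scheduler.enqueue_request(Request("https://example.com/a")))  # False (duplicate)
print(scheduler.next_request().url)  # https://example.com/a
print(scheduler.next_request())      # None
```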

5. The engine passes the request to the downloader. Downloader middlewares can hook into this stage: `process_request` runs before the download and `process_response` runs after it. With middlewares you can, for example, switch the User-Agent at random, set a proxy IP, or download dynamic pages with Selenium.

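```python
import time

from fake_useragent import UserAgent
from scrapy.http import HtmlResponse
from selenium import webdriver
from selenium.webdriver.chrome.service import Service


# Switch the User-Agent at random
class RandomUserAgentMiddleware:
    def __init__(self, crawler):
        super().__init__()
        self.ua = UserAgent()
        # RANDOM_UA_TYPE names a fake_useragent attribute such as "random", "chrome" or "firefox"
        self.ua_type = crawler.settings.get("RANDOM_UA_TYPE", "random")

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy calls this factory with the running crawler, which gives access to settings
        return cls(crawler)

    def process_request(self, request, spider):
        # setdefault only fills the header if it is still unset, so the built-in
        # UserAgentMiddleware must be disabled for the random value to take effect
        request.headers.setdefault("User-Agent", getattr(self.ua, self.ua_type))


# Set a proxy IP
class RandomProxyMiddleware:
    def process_request(self, request, spider):
        # GetIP is the author's own proxy-pool helper (not shown here);
        # get_random_ip() is expected to return a proxy URL such as "http://host:port"
        get_ip = GetIP()
        request.meta["proxy"] = get_ip.get_random_ip()


# Download dynamic (JavaScript-rendered) pages with Selenium
class JSPageMiddleware:
    def process_request(self, request, spider):
        # Selenium 4 style; Selenium 3 used webdriver.Chrome(executable_path=...)
        browser = webdriver.Chrome(service=Service("e:/soft/selenium/chromedriver.exe"))
        try:
            browser.get(request.url)
            time.sleep(3)  # crude wait for JavaScript to finish rendering
            print("Visiting: {0}".format(request.url))
            # Returning a Response skips the remaining process_request methods and the
            # actual download; the response still passes through process_response on its way to the spider
            return HtmlResponse(url=browser.current_url, body=browser.page_source,
                                encoding="utf-8", request=request)
        finally:
            browser.quit()
```

6. Analyzed together with step 7.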
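The order in which `process_request` and `process_response` run, and why `JSPageMiddleware` can skip the download by returning a response, can be simulated in plain Python. This is a hypothetical model rather than Scrapy's code: `fetch_through_middlewares`, `Recorder`, `fake_download` and the dict-based requests and responses are invented for illustration, and the real hooks also receive `spider`. It follows the documented rules: `process_request` runs in ascending order of the middleware's priority number, `process_response` runs in descending order on every response, and a `process_request` that returns a response short-circuits the remaining `process_request` calls and the download. Returning a new `Request` (which reschedules it) is not modeled.

```python
log = []


def fetch_through_middlewares(request, middlewares, download):
    """Hypothetical model of the downloader-middleware chain.

    middlewares: list of (priority, middleware) pairs.
    """
    ordered = [mw for _, mw in sorted(middlewares, key=lambda pair: pair[0])]
    response = None
    # process_request runs in ascending priority order
    for mw in ordered:
        response = mw.process_request(request)
        if response is not None:
            break  # a middleware produced a response: skip the rest and the download
    if response is None:
        response = download(request)
    # process_response runs in descending priority order on every response
    for mw in reversed(ordered):
        response = mw.process_response(request, response)
    return response


class Recorder:
    """Hypothetical middleware that records when its hooks run."""

    def __init__(self, name, short_circuit=False):
        self.name = name
        self.short_circuit = short_circuit

    def process_request(self, request):
        log.append(f"{self.name}.process_request")
        if self.short_circuit:
            # Like JSPageMiddleware: build the response here instead of downloading
            return {"url": request["url"], "body": f"rendered by {self.name}"}
        return None  # None means: continue down the chain

    def process_response(self, request, response):
        log.append(f"{self.name}.process_response")
        return response


def fake_download(request):
    log.append("download")
    return {"url": request["url"], "body": "downloaded"}


fetch_through_middlewares({"url": "https://example.com"},
                          [(543, Recorder("B")), (100, Recorder("A"))], fake_download)
print(log)
# ['A.process_request', 'B.process_request', 'download', 'B.process_response', 'A.process_response']
```

Setting `short_circuit=True` on `A` removes both `B.process_request` and `download` from the log, while both `process_response` calls still run.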
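The middlewares in step 5 only run once they are registered in the project's `settings.py`. A sketch, assuming the classes live in `myproject.middlewares` (a hypothetical module path; substitute your own project package) and using illustrative priority numbers. The built-in `UserAgentMiddleware` (priority 500) is disabled with `None`: otherwise its `process_request` runs first and sets a User-Agent, which turns the `setdefault` call in `RandomUserAgentMiddleware` into a no-op. The proxy middleware sits below 750 so that it sets `request.meta["proxy"]` before Scrapy's built-in `HttpProxyMiddleware` reads it.

```python
# settings.py (sketch): "myproject" is a placeholder for your project package
DOWNLOADER_MIDDLEWARES = {
    # Disable the built-in middleware so it does not set a User-Agent first
    "scrapy.downloadermiddlewares.useragent.UserAgentMiddleware": None,
    # Lower numbers run process_request earlier; these values are illustrative
    "myproject.middlewares.RandomUserAgentMiddleware": 543,
    "myproject.middlewares.RandomProxyMiddleware": 544,
    "myproject.middlewares.JSPageMiddleware": 545,
}

# Read by RandomUserAgentMiddleware: which fake_useragent attribute to use
RANDOM_UA_TYPE = "random"
```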

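7. The engine passes the response to the spider. Spider middlewares can hook into this stage: `process_spider_input` runs before the response reaches the spider, and `process_spider_output` runs on the results the spider returns after processing it. The engine then decides the next step from the type of each returned object: a request goes back to step 2, and an item is handed to the item pipelines.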
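The type-based routing at the end of step 7 can be pictured with a small hypothetical model: a `process_spider_output`-style generator that filters the spider's results, followed by a loop that sends `Request` objects back to the scheduler and everything else to the pipelines. The `Request` class and the functions `drop_offsite`, `dispatch` and `parse` are invented for illustration; in real Scrapy, `process_spider_output` also receives the response and the spider, and items can be dicts or item objects.

```python
from dataclasses import dataclass


@dataclass
class Request:
    # Hypothetical stand-in for scrapy.Request
    url: str


def drop_offsite(results, allowed_prefix):
    """Hypothetical process_spider_output-style filter.

    It receives the spider's results as an iterable and yields what should
    continue: requests leaving the allowed site are dropped, items pass through.
    """
    for obj in results:
        if isinstance(obj, Request) and not obj.url.startswith(allowed_prefix):
            continue
        yield obj


def dispatch(results, scheduler_queue, pipeline_items):
    # The engine's decision: requests go back to the scheduler (step 2),
    # anything else is treated as an item and sent to the pipelines
    for obj in results:
        if isinstance(obj, Request):
            scheduler_queue.append(obj)
        else:
            pipeline_items.append(obj)


def parse(response_url):
    # A toy spider callback yielding a mix of items and follow-up requests
    yield {"title": "Example", "url": response_url}
    yield Request("https://example.com/page/2")
    yield Request("https://other.org/ad")


queue, items = [], []
dispatch(drop_offsite(parse("https://example.com/"), "https://example.com"), queue, items)
print([r.url for r in queue])  # ['https://example.com/page/2']
print(items)                   # [{'title': 'Example', 'url': 'https://example.com/'}]
```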